Before we take you into the amazing world of digital twins (what they are, and their immense potential for banks), here’s a taster of the pivotal role digital twin technology is already playing in the digitalisation of a range of sectors. For example:

- Rolls-Royce runs engine analytics in flight

- Unilever reduces false AI alerts

- Mercedes wins F1 races

- Marks & Spencer optimises store layouts

- NASA maintains and repairs space stations

- Nevada manages its water supply

- Tesla updates the software in each car daily, and

- Singapore manages climate and traffic

It is not surprising, then, that the digital twin market is forecast to grow at a mind-boggling 58% CAGR to $48Bn by 2026 and $106Bn by 2028, and that some commentators describe digital twins as the ‘third wave’ of the internet, after search and social media.

So, what is a digital twin, and what does it have to do with a bank?

Gartner defines digital twins as a design pattern for a new class of enterprise software components that produce a digital proxy for a thing, person or process. Deloitte explains it as an evolving digital profile of the historical and current behavior of a thing, person or process.

The 4 words – digital, proxy, historical, current – are key to fully understanding the power and value of digital twins to a bank.

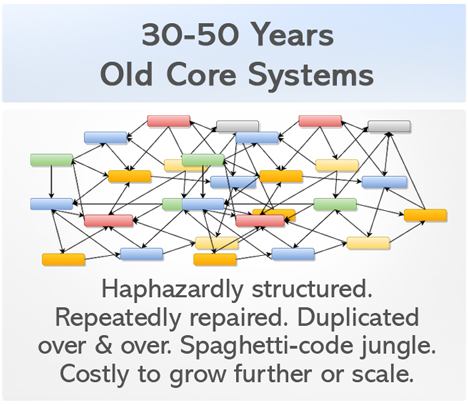

Digital twin technology treats legacy systems as a ‘thing’, and we know what these legacy systems typically look like at most banks.

Over decades, intricate pieces of custom code have become embedded deep inside them, sometimes four to seven layers down! To make things worse, these systems are frustratingly inflexible, mostly batch-based, and siloed.

The resulting landscape is often less an intentional architecture than a mirror of organizational silos. Some systems are built and owned in-house, while others are provided by third parties.

This architectural and data log-jam is where digital twins truly come into play. It’s the problem they are built to solve, and where they shine most!

By design, a digital twin consists of 3 distinct parts:

- the legacy or the current system,

- the digital system, and

- connections between the two platforms

This means that, in a bank, a digital twin allows data to flow between back end and front end, new and old, static and transactional, and, importantly, both upstream and downstream.
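To make the three-part structure concrete, here is a minimal Python sketch. It is an illustration under simplifying assumptions, not how any particular digital twin product is built: the class names `LegacySystem`, `DigitalSystem` and `Connector` are hypothetical, plain dictionaries stand in for real platforms, and the connector copies one record at a time on request rather than continuously.

```python
from dataclasses import dataclass, field


@dataclass
class LegacySystem:
    """The existing system of record (a plain dict stands in for, say, a core ledger)."""
    records: dict = field(default_factory=dict)


@dataclass
class DigitalSystem:
    """The digital platform that new channels and applications work against."""
    records: dict = field(default_factory=dict)


class Connector:
    """The connections between the two platforms, carrying changes in both directions."""

    def __init__(self, legacy: LegacySystem, digital: DigitalSystem) -> None:
        self.legacy = legacy
        self.digital = digital

    def legacy_to_digital(self, key: str) -> None:
        # Copy the system-of-record value so the digital side sees it.
        self.digital.records[key] = dict(self.legacy.records[key])

    def digital_to_legacy(self, key: str) -> None:
        # Write a change made on the digital side back to the system of record.
        self.legacy.records[key] = dict(self.digital.records[key])


# Illustrative data only.
legacy = LegacySystem({"cust-1": {"name": "A. Tan", "segment": "retail"}})
digital = DigitalSystem()
link = Connector(legacy, digital)

link.legacy_to_digital("cust-1")                  # old -> new
digital.records["cust-1"]["segment"] = "premier"  # change made on the digital side
link.digital_to_legacy("cust-1")                  # new -> old
print(legacy.records["cust-1"])  # {'name': 'A. Tan', 'segment': 'premier'}
```

The connector copies records rather than sharing them, so the two platforms stay independent and only exchange data through the connection, which is the role the third part plays.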

At its most basic, the core function of a digital twin is to speed up:

• access to data

• flow of data, and

• application of data

So, why does a bank need a digital twin?

Here are some of the digital transformation challenges that leave banks feeling boxed in:

- Long development cycles for customer applications

- Limited support for new-age business models

- Difficulty exposing APIs and data to channels

- Supplying both batch and real-time data for AI/ML

- Developing a single view of the customer

- High cost of core banking replacement

- Difficulty and risk in system migration

- Hurdles to progressive modernization

- High MIPS costs, as queries hit mainframes

- Neo-banks serving clients at a third of the cost

- Big Tech and FinTech taking market share

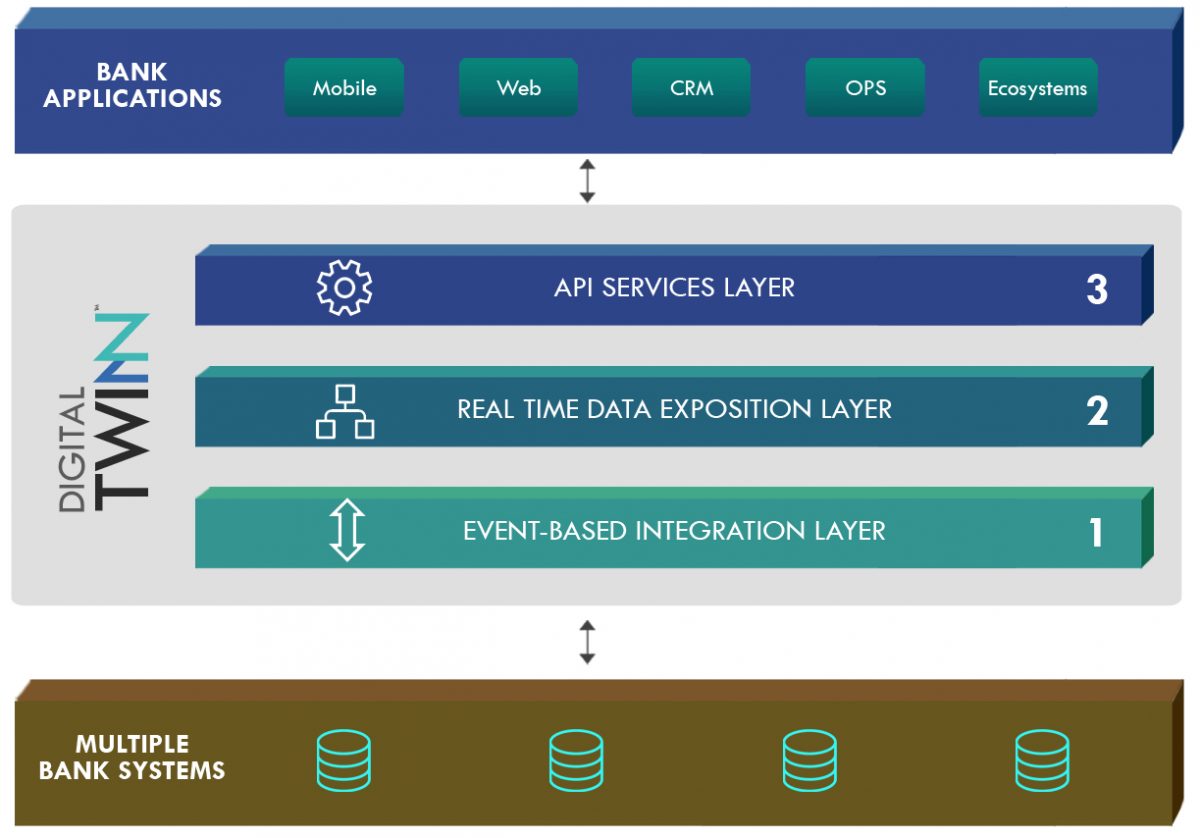

How does the Digital TWINN™ fit inside a bank’s systems?

First, and critically, the Digital TWINN™ is a non-intrusive solution: it works alongside the bank’s existing systems rather than requiring changes to them. This design principle significantly lowers the barriers to onboarding and adoption in a bank.

It is a living, breathing representation of a bank’s customer data that changes in line with real-time events. Via APIs, this data can be queried and updated, and new data can be created too.

The TWINN™ comes fitted with 3 key functional components.

1. Event-based Integration Layer

Through a variety of non-intrusive patterns, including webhooks, real-time messaging and database connectors, a replica is created of data from across the bank’s data sources. This event-based mechanism then allows high-performance, large-scale CRUD (create, read, update, delete) operations to be performed while reducing the workload, in both cost and time, on the bank’s systems of record.
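The event-based pattern can be sketched in a few lines of Python. This is an assumption-laden illustration, not Percipient’s implementation: `ChangeEvent` and `Replica` are hypothetical names, the event shape (operation, key, changed fields) is invented for the example, and in practice events would arrive from webhooks, a message broker or a database connector rather than from an in-memory list. What it shows is that once changes are replayed into a replica, reads can be served from the replica instead of the system of record.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ChangeEvent:
    """A change captured from a source system (hypothetical shape)."""
    op: str                       # "create", "update" or "delete"
    key: str                      # record identifier, e.g. a customer ID
    data: Optional[dict] = None   # new or changed fields; None for deletes


class Replica:
    """Event-fed copy of source data; reads never touch the source system."""

    def __init__(self) -> None:
        self._rows: dict[str, dict] = {}
        self.applied = 0

    def apply(self, event: ChangeEvent) -> None:
        if event.op == "create":
            self._rows[event.key] = dict(event.data or {})
        elif event.op == "update":
            # Merge only the changed fields into the existing row.
            self._rows.setdefault(event.key, {}).update(event.data or {})
        elif event.op == "delete":
            self._rows.pop(event.key, None)
        else:
            raise ValueError(f"unknown op: {event.op!r}")
        self.applied += 1

    def read(self, key: str) -> Optional[dict]:
        row = self._rows.get(key)
        return dict(row) if row is not None else None


# Illustrative events only.
events = [
    ChangeEvent("create", "cust-42", {"name": "Jane Lim", "balance": 1200}),
    ChangeEvent("update", "cust-42", {"balance": 950}),
    ChangeEvent("create", "cust-43", {"name": "Raj Kumar", "balance": 80}),
    ChangeEvent("delete", "cust-43"),
]
replica = Replica()
for e in events:
    replica.apply(e)

print(replica.read("cust-42"))  # {'name': 'Jane Lim', 'balance': 950}
print(replica.read("cust-43"))  # None
```

Because the replica only receives changes, the system of record is touched once per change rather than once per query. A production design would also need ordering guarantees and handling of redelivered or out-of-order events, which this sketch omits.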

2. Data Exposition Layer

This layer exposes data as APIs from a single endpoint. It comprises:

- a Transact Store (for real-time data), and

- an Analytics Store (for historical data).
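One way to picture a single endpoint in front of two stores is sketched below in Python. The names (`TransactStore`, `AnalyticsStore`, `Endpoint`, the `view` parameter) and the routing rule are hypothetical: each incoming change updates the latest state in the transact store and is appended, with its timestamp, to the analytics store’s history, and one `query` method decides which store answers.

```python
from collections import defaultdict


class TransactStore:
    """Latest state per key, for real-time reads."""

    def __init__(self) -> None:
        self.current: dict[str, dict] = {}

    def upsert(self, key: str, data: dict) -> None:
        # Merge the changed fields over whatever is already held for this key.
        self.current[key] = {**self.current.get(key, {}), **data}


class AnalyticsStore:
    """Append-only, timestamped history per key, for historical analysis."""

    def __init__(self) -> None:
        self.history: dict[str, list] = defaultdict(list)

    def append(self, key: str, ts: str, data: dict) -> None:
        self.history[key].append((ts, dict(data)))


class Endpoint:
    """A single entry point that routes each query to the appropriate store."""

    def __init__(self) -> None:
        self.transact = TransactStore()
        self.analytics = AnalyticsStore()

    def ingest(self, key: str, ts: str, data: dict) -> None:
        # Every change feeds both stores.
        self.transact.upsert(key, data)
        self.analytics.append(key, ts, data)

    def query(self, key: str, view: str = "current"):
        if view == "current":
            return self.transact.current.get(key)
        if view == "history":
            return list(self.analytics.history.get(key, []))
        raise ValueError(f"unknown view: {view!r}")


# Illustrative data only.
api = Endpoint()
api.ingest("acct-7", "2024-01-01T09:00", {"balance": 500})
api.ingest("acct-7", "2024-01-02T09:00", {"balance": 650})
print(api.query("acct-7"))                  # {'balance': 650}
print(len(api.query("acct-7", "history")))  # 2
```

Keeping both views behind one `query` call means consumers need not know which store holds the answer. A real system would typically use different storage engines for each store; the sketch glosses over that with in-memory structures.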

Data is mapped from the underlying bank systems to the TWINN’s canonical data model (CDM) using the BIAN and ISO 20022 financial services industry standards, and then abstracted.

The CDM enables every application to translate its data into a single, common model that all other applications understand.

This, in turn, means the bank performs fewer translations and finds both its translations and its business logic easier to maintain.
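The canonical data model idea can be illustrated with a small Python sketch. The source layouts, the canonical field names and the helper functions are hypothetical and are not taken from any industry standard; the example only shows two systems that describe the same customer differently being mapped into one shared shape, plus the usual back-of-envelope count behind the ‘fewer translations’ argument.

```python
# Hypothetical source layouts: the same customer, described differently by two systems.
CORE_BANKING_ROW = {"CUST_NO": "0042", "CUST_NM": "JANE LIM", "BRCH_CD": "SG01"}
CARDS_ROW = {"holderId": "42", "holderName": "Jane Lim", "homeBranch": "sg01"}


def core_banking_to_canonical(row: dict) -> dict:
    # Strip zero-padding and normalise case so values match the canonical model.
    return {
        "party_id": row["CUST_NO"].lstrip("0"),
        "party_name": row["CUST_NM"].title(),
        "branch_code": row["BRCH_CD"].upper(),
    }


def cards_to_canonical(row: dict) -> dict:
    return {
        "party_id": row["holderId"],
        "party_name": row["holderName"],
        "branch_code": row["homeBranch"].upper(),
    }


# One mapping per source system into the (hypothetical) canonical model.
MAPPERS = {"core_banking": core_banking_to_canonical, "cards": cards_to_canonical}


def to_canonical(system: str, row: dict) -> dict:
    return MAPPERS[system](row)


def translators_needed(n_systems: int, canonical: bool) -> int:
    """Directed translators needed for every system to exchange data with every other."""
    if canonical:
        return 2 * n_systems  # one mapping into the CDM and one out of it, per system
    return n_systems * (n_systems - 1)  # one translator per ordered pair of systems


a = to_canonical("core_banking", CORE_BANKING_ROW)
b = to_canonical("cards", CARDS_ROW)
print(a == b)  # True
print(translators_needed(10, canonical=False), translators_needed(10, canonical=True))  # 90 20
```

With point-to-point integration, each ordered pair of systems needs its own translator, so the count grows as N(N−1); with a canonical model, each system needs one mapping in and one out, so it grows as 2N. The saving only appears beyond three systems (at N = 3 both give 6), but it widens quickly as the estate grows.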

3. API Services Layer

The TWINN™ comes with a rich and growing library of pre-built APIs that enable faster time to market for banks, especially when budgets, time or skills are in short supply.

What can a bank do with its Digital TWINN™?

A lot.

Our team at Percipient will be more than happy to help, should you wish to talk to us.

Team Percipient